JNode handbook

This book contains all JNode documentation.

Goals

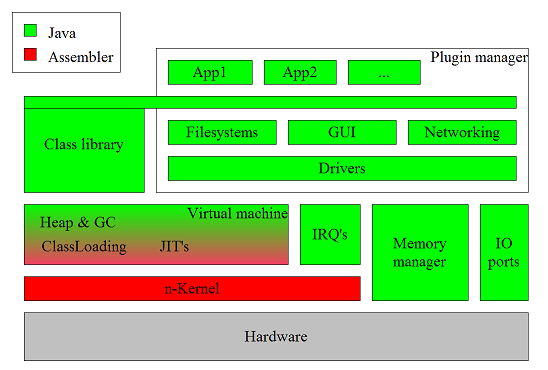

JNode is a Java New Operating System Design Effort.

The goal is to create an easy-to-use, easy-to-install Java operating system for personal use. Any Java application should run on it, fast and secure!

User guide

General user information.

History

Very early in Java's history, around JDK 1.0.2, Ewout Prangsma (the founder of JNode) dreamed of building a Java Virtual Machine in Java.

It should be a system that was not only a VM, but a complete runtime environment that does not need any other form of operating system. So it had to be a lightweight and, most importantly, flexible system.

Ewout made various attempts at achieving these goals, starting with JBS, the Java Bootable System. It became a somewhat functional system, but had far too much native code (C and assembler) in it. So he started working on a new JBS system, called JBS2, and finally JNode. It had a simple target: using NO C code and only a little bit of assembly code.

In May 2003 Ewout went public with JNode, and development proceeded ever faster from that point on.

Several versions have been released and there are now concrete plans for the first major version.

Application testing list

This page lists Java applications that we use to test JNode.

- (Should) work

- Partially usable

- Starts but doesn't really work yet

- empty

- Needs work

- Needs (lots of) work

- Not tested yet

Applications starting with A

Here are details about applications whose names start with A

- Ant

website : http://ant.apache.org/

comments :

Applications starting with B

Here are details about applications whose names start with B

- Beanshell

website : http://www.beanshell.org/

comments :

Applications starting with E

Here are details about applications whose names start with E

- ecj (eclipse compiler for java)

website : http://www.eclipse.org ???

comments :

Applications starting with H

Here are details about applications whose names start with H

- hsqldb

website : http://hsqldb.org/

comments :

Applications starting with J

Here are details about applications whose names start with J

- JUnit

website : http://junit.org/

comments : only tested in console mode.

- javac

website : http://openjdk.java.net/

comments : the Sun compiler for Java. It works fine, but you can run into GC bugs when you compile your first program. The following "warm-up" sequence avoids this:

- Run "gc".

- Run "javac" with no arguments.

- Run "gc" again.

- Use "javac" to compile a (small) program.

- Jetty6

website : http://www.mortbay.org/

comments : it works partially

- JEdit

website : http://www.jedit.org/

comments : by using jedit.jar alone, I only see the splash screen. If I try the installer, it fails at 0% of progress with an "IOException" dialog box but no stacktrace.

- JChatIRC

website : http://jchatirc.sourceforge.net/

comments :

Applications starting with N

Here are details about applications whose names start with N

- NanoHttpd

website : http://elonen.iki.fi/code/nanohttpd/

comments :

Applications starting with R

Here are details about applications whose names start with R

- Rhino

website : http://www.mozilla.org/rhino/

comments :

Applications starting with T

Here are details about applications whose names start with T

- tomcat

website : http://tomcat.apache.org/

comments :

Getting Started

To start using JNode you have two options:

Getting the latest released CD-ROM image

- Download the bootable CDROM image from here.

- Unzip it

- Burn it to CD-ROM

- Boot a test PC from it or start it in VMWare

Getting the latest sources and building them

- Checkout the jnode module from GitHub. See the GitHub jnode repository page for details.

- Build JNode using the build.bat (on windows) or build.sh (on unix) script.

Without parameters, these scripts will list all build options. You probably want to use the cd-x86 option that builds the CD-ROM image.

- Boot a test PC from it or start it in VMWare

Getting nightly builds

- Download the nightly-builds

- You can also get the sources and a ready-to-use vmx file for VMWare

Running JNode

Once JNode has booted, you will see a JNode > command prompt. See the shell reference for available commands.

The 20 minute guided tour.

This is a quick guide to get started with JNode. It will help you to download a JNode boot image, and explain how to use it. It will also get you started with exploring JNode's capabilities and give you some tips on how to use the JNode user interfaces.

To start with, you need to download a JNode boot image. Go to this page and click on the link for the latest version. This will take you to a page with the downloadable files for the version. The page also has a link to a page listing the JNode hardware requirements.

At this point, you have two choices. You can create a bootable CD ROM and then run JNode on real hardware by booting your PC from the CD ROM drive. Alternatively, you can run JNode on a virtual PC using VMWare.

To run JNode on real hardware:

- Download the "gzip compressed ISO image" from the JNode download page.

- Uncompress the ISO image using "gunzip".

- Use your favorite CD burning software to burn the ISO image onto a blank CD ROM.

- Shutdown your PC.

- Put the JNode boot CD into the CD drive

- Boot from the CD, following the PC manufacturer's instructions.

To run JNode from VMWare:

- Download a free copy of VMware-Player. (You can also use the free VMware-Server, which allows you to modify the VM parameters and so on, or buy one of the more advanced VMWare products.)

- Install VMWare following the instructions provided.

- Download the "gzip compressed ISO image" and the "vmx" file from the JNode download page.

- Uncompress the ISO image using "gunzip", and make sure that the image is in the same directory as the "vmx" file.

- Launch VMWare, browse to find the JNode "vmx" file, and launch it as described in the VMWare user guide.

When you start up JNode, the first thing you will see after the BIOS messages is the Grub bootloader menu allowing you to select between various JNode configurations. If you have 500MB or more of RAM (or 500MB assigned to the VM if you are using VMware), we recommend the "JNode (all plugins)" configuration. This allows you to run the GUI. Otherwise, we recommend the "JNode (default)" or "JNode (minimal shell)" configurations. (For more information on the available JNode configurations, ...).

Assuming that you choose one of the recommended configurations, JNode will go through the bootstrap sequence, and start up a text console running a command shell, allowing you to issue commands. The initial command prompt will look like this:

JNode />

Try a couple of commands to get you started:

JNode /> dir

will list the JNode root directory,

JNode /> alias

will list the commands available to you, and

JNode /> help <command>

will show you a command's online help and usage information.

There are a few more useful things to see:

- Entering ALT+F7 (press the ALT and F7 keys at the same time) will switch to the logger console. Entering ALT+F1 switches you back to the shell console.

- Entering SHIFT+UP-ARROW and SHIFT+DOWN-ARROW scrolls the current console backwards and forwards.

- Entering TAB performs command name and argument completion.

- Entering UP-ARROW and DOWN-ARROW allows you to choose commands from the command history.

The JNode completion mechanism is more sophisticated than the analogs in typical Linux and Windows shells. In addition to performing command name and file name completion, it can do completion of command options and context sensitive argument completion. For example, if you want to set up your network card using the "dhcp" command, you don't need to go hunting for the name of the JNode network device. Instead, enter the following:

JNode /> dhcp eth<TAB>

The completer will show a list of known ethernet devices allowing you to select the appropriate one. In this case, there is typically one name only, so this will be added to the command string.

For more information on using the shell, please refer to the JNode Shell page.

I bet you are bored with text consoles by now, and you are eager to see the JNode GUI. You can start it as follows:

JNode /> gc

JNode /> startawt

The GUI is intended to be intuitive, so give it a go. It currently includes a "Text Console" app for entering commands, and a couple of games. If you have problems with the GUI, ALT+F12 should kill the GUI and drop you back to the text console.

By the way, you can switch the font rendering method used by the GUI before you run "startawt", as follows:

JNode /> set jnode.font.renderer ttf|bdf

If you have questions or you just want to talk to us, please consider joining our IRC channel (#JNode.org@irc.oftc.net). We're all very friendly and try to help everyone *g*

If you find a bug, we would really appreciate you posting a bug report via our bug tracker. You can also enter support and feature requests there.

Feel free to continue trying out JNode. If you have the time and the skills, please consider joining us to make it better.

Booting from the network

Two options are available here:

Network boot disk

If you do not have a bootable network card, you can create a network boot disk instead. See the GRUB manual for details, or use ROM-o-matic or the GRUB network boot-disk creator.

NIC-based network boot

To boot JNode from the network, you will need a bootable network card and a DHCP/BOOTP and TFTP server setup.

- Set the TFTP base directory to <JNode dir>/all/build/x86/netboot

- Set the boot file to nbgrub-<card> where <card> is the card you are using

- Set DHCP option 150 to (nd)/menu-nb.lst

Booting from USB memory stick

This guide shows you how to boot JNode from a USB memory stick.

You'll need a Windows machine to build on and a clean USB memory stick (it may be wiped!).

Step 1: Build a JNode iso image (e.g. build cd-x86-lite)

Step 2: Download XBoot.

Step 3: Run XBoot with Administrator rights

Step 4: Open file: select the ISO created in step 1. Choose "Add grub4dos using iso emulation".

Step 5: Click "Create USB"

XBoot will now install a bootloader (choose the default) and prepare the USB memory stick.

After that, eject the memory stick and give it a try.

When it boots, you'll first have to choose JNode from the menu. Then the familiar JNode Grub boot menu appears.

Eclipse Howto

This chapter explains how to use the Eclipse 3.2 IDE with JNode.

Starting

JNode contains several Eclipse projects within a single SVN module. To checkout and import these projects into Eclipse, execute the following steps:

- Checkout the jnode module from SVN using any SVN tool you like.

Look at the sourceforge SVN page for more details.

- Start Eclipse

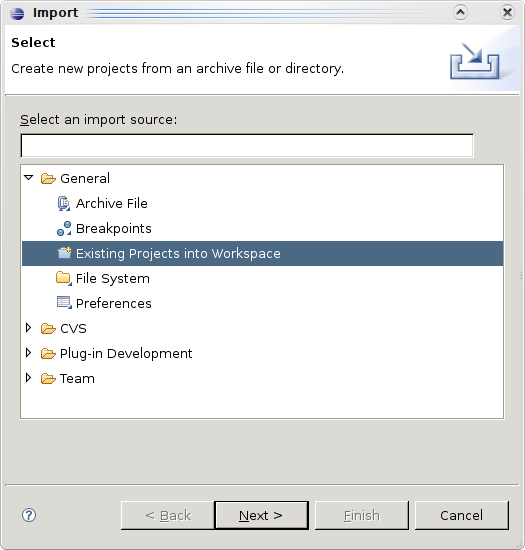

- Select File - Import. You will get this screen.

- Select "Existing project into workspace".

- Enter the root directory of the checked-out jnode SVN module in this screen.

The listed projects will appear when the root directory has been selected.

- Select Finish

- You will end up with all projects in your Eclipse workspace like this:

Building

You can build JNode within Eclipse by using the build.xml Ant file found in the JNode-All project. However, due to the memory requirements of the build process, it is better to run the build from the commandline using build.bat (on windows) or build.sh (on unix).

Running in Bochs

Running JNode in Bochs does not seem to work out of the box yet. It fails on setting CPU register CR4 into 4Mb paging mode.

A compile setting that enables 4Mb pages is known to solve this problem. To enable this setting, run configure with the --enable-4meg-pages argument or add #define BX_SUPPORT_4MEG_PAGES 1 to config.h.

Running in KVM

Setup

If you have a CPU with hardware virtualization support you can run JNode in kvm, which is much faster than vmware-[player|server] (at least for me). You need a CPU that supports either Intel's IVT (aka Vanderpool) or AMD's AMD-V (aka Pacifica).

With

egrep '^flags.*(vmx|svm)' /proc/cpuinfo

you can easily check whether your CPU supports VT. If the command prints any output, your CPU is supported; otherwise it is not. If your CPU is supported, also check that VT is enabled in your system BIOS.
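The same check can be sketched in Java. This is only an illustration of what the egrep expression tests for; the class and method names (`VirtFlags`, `supportsHardwareVirtualization`) are made up for this example and are not part of JNode or kvm.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.regex.Pattern;

final class VirtFlags {
    // Same expression as the egrep command; MULTILINE lets ^ match at the start of every line.
    private static final Pattern VT_FLAGS =
            Pattern.compile("^flags.*(vmx|svm)", Pattern.MULTILINE);

    // Returns true if any "flags" line of the given cpuinfo text mentions vmx or svm.
    static boolean supportsHardwareVirtualization(String cpuinfo) {
        return VT_FLAGS.matcher(cpuinfo).find();
    }

    public static void main(String[] args) throws IOException {
        // /proc/cpuinfo only exists on Linux hosts.
        String cpuinfo = new String(
                Files.readAllBytes(Paths.get("/proc/cpuinfo")), StandardCharsets.UTF_8);
        System.out.println(supportsHardwareVirtualization(cpuinfo)
                ? "VT flag found"
                : "no VT flag found");
    }
}
```

As with the egrep command, a positive result only says that the CPU advertises the feature; it does not tell you whether VT is enabled in the BIOS.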

Load the kvm modules matching your CPU, either "modprobe kvm_intel" or "modprobe kvm_amd", install kvm user tools and setup permissions so users may run kvm (Have a look at a HOWTO for your distro for details: Ubuntu, Gentoo).

Once you have set up everything you can start kvm from the command line (I think there are GUI frontends too, but I'm using the command line). You should be careful though: ACPI in JNode seems to kill kvm, so always disable ACPI. I also had to deactivate the kvm-irqchip as it trashed JNode. The command that works for me is:

kvm -m 768 -cdrom jnode-x86-lite.iso -no-acpi -no-kvm-irqchip -serial stdout -net none -std-vga

The "-serial" switch is optional but I need it for kdb (kernel debugger). If you want to use the VESA mode of JNode you should also use "-std-vga"; otherwise you will not have a VESA mode. Set the memory to whatever you like (768MB is my default).

Things that still need to be tested:

Running in parallels

I found only one way to run JNode with Parallels.

In Options->Emulation flags, there is a parameter called Acceleration level that takes 3 values :

- disabled : JNode will work but that's very slow

- normal : JNode won't boot (freeze at "Detected 1 processor")

- high : JNode won't boot (freeze at "Detected 1 processor")

Running in VBox

You can now run JNode on VirtualBox too. ACPI is not working, but you will get a prompt and can use JNode.

TODO: Test network, usb,...

Running in Virtual PC

This page describes how to run JNode in Virtual PC.

In its current state, JNode does not run in Virtual PC.

Running in VMWare

Basic Procedure

The JNode build process creates a VMWare compatible ".vmx" file that allows you to run JNode using recent releases of VMWare Player.

Assuming that you build JNode using the "cd-x86-lite" target, the build will create an ISO format CDROM image called "jnode-x86-lite.iso" in the "all/build/cdroms/" directory. In the same directory, the build also generates a file called "jnode-x86-lite.iso.vmx" for use with VMWare. To boot JNode from this ".iso" file, simply run the player as follows:

$ vmplayer all/build/cdroms/jnode-x86-lite.iso.vmx

Altering the VMWare virtual machine configuration

By default, the generated ".vmx" file configures a virtual machine with a virtual CDROM for the ISO, a bridged virtual ethernet and a virtual serial port that is connected to a "debugger.txt" file. If you want to configure the VMWare virtual machine differently, the simplest option is to buy VMWare Workstation and use its graphical interfaces to configure and run JNode. (Copy the generated ".vmx" file to a different location, and use that as the starting point.)

If you don't want to pay for VMWare Workstation, you can achieve the same effect by changing the ".vmx" file by hand. However, changes you make that way will be clobbered next time you run the "build" script. The solution is to do the following:

This procedure assumes some changes in a patch that is waiting to be committed.

- Create a file containing the VMX properties that you want to add or replace. Put this file somewhere that won't be clobbered by "build clean".

- Copy the "jnode.properties.dist" file to "jnode.properties".

- Open the "jnode.properties" file in a text editor.

- Find the line that starts "#vmware.vmx.overrides=".

- Remove the "#" and replace the characters after the "=" with the override file's pathname.

- Save the file.

- Run "build cd-x86-lite" (or whatever you normally use to build a CDROM image).

This should create the "jnode-x86-lite.iso.vmx" file with the VMX settings from your file as overrides to the default settings.
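The override idea can be pictured as a simple key-by-key merge. The sketch below is an assumption about what "overrides" means here (an override replaces a default with the same key and adds any new key); it is not the build script's actual code. The class name `VmxOverrides` and the sample values are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class VmxOverrides {
    // Start from the generated defaults, then let every override entry win.
    static Map<String, String> merge(Map<String, String> defaults,
                                     Map<String, String> overrides) {
        Map<String, String> result = new LinkedHashMap<>(defaults);
        result.putAll(overrides);
        return result;
    }

    // Render the settings in the key = "value" form used by ".vmx" files.
    static String render(Map<String, String> settings) {
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<String, String> e : settings.entrySet()) {
            sb.append(e.getKey()).append(" = \"").append(e.getValue()).append("\"\n");
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        Map<String, String> defaults = new LinkedHashMap<>();
        defaults.put("memsize", "512");          // hypothetical default value
        defaults.put("nvram", "JNode.nvram");

        Map<String, String> overrides = new LinkedHashMap<>();
        overrides.put("nvram", "/home/me/jnode.nvram"); // hypothetical override path

        System.out.print(render(merge(defaults, overrides)));
    }
}
```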

Unfortunately, VMWare have not released any documentation for the myriad VMX settings. The best 3rd-party documentation that I can find is the sanbarrow.com website. There are also various "builder" applications around, but they don't look all that good.

VMWare disks and Boot device order

If you add a VMWare virtual (or real) disk drive, the VMWare virtual machine's BIOS will try to boot from that drive. Unless you have set up the drive to be bootable, this won't work. The easy way to fix this is to change VMWare's BIOS settings to alter the boot device order.

- Run vmplayer as above.

- Quickly click the VMWare window to give it keyboard focus and hit [F2]. (You have a small window to do this ... just a second or two!).

- In the BIOS settings screen, select the "Booting" panel and move the "cdrom" to the top; i.e. the first device to be tried.

- Save the BIOS settings to the virtual NVRAM and exit the BIOS settings editor to continue the boot.

By default the NVRAM settings are stored in the "JNode.nvram" file in "all/build/cdroms" directory, and will be clobbered when you run "build clean". If this is a problem, create a VMX override (see above) with a "nvram" entry that uses a different location for the file.

Running on a PC

To run JNode on a PC using the bootable CDROM, your PC must comply with the following specifications:

- Pentium or above CPU

- 128Mb RAM or more (I'm not sure what the minimum is)

- Bootable CDROM drive that supports El-Torito harddisk emulation

- VGA compatible video card

- Keyboard

The GRUB Boot Menu

The first JNode related information you will see after booting from a JNode CDROM image is the GRUB bootloader page. The GNU GRUB bootloader is responsible for selecting a JNode configuration from a menu, loading the corresponding kernel image and parameters into RAM and causing the JNode kernel to start executing.

When GRUB starts, it displays the bootloader page and pauses for a few seconds. If you do nothing, GRUB will automatically load and start the default configuration. Pressing any key at this point will interrupt the automatic boot sequence and allow you to select a specific configuration. You can use the UP-ARROW and DOWN-ARROW to choose a JNode configuration, then hit ENTER to boot it up.

There are a number of JNode configurations in the menu:

- The configurations that include "MP" in the description enable JNode's multiprocessor support on a capable machine. Since JNode's multiprocessor support is not fully functional, you should probably avoid these configurations for now.

- The configurations that include "GUI" in the description will start up the JNode GUI. Configurations without this tag will start up the JNode command shell on a text console.

- The other difference between the configurations is in the sets of plugins that they load. In general, loading more plugins will cause JNode to use more RAM.

It is currently not a good idea to boot JNode straight to the GUI. If you want to run the GUI, it is best to boot one of the non-GUI configurations, typically "JNode (all plugins)". Then from the text console, run the following commands:

JNode /> gc

JNode /> startawt

Hardware Compatibility List

Use this list to find out if JNode already supports your hardware.

If you find out that your device is not on the list, or the information provided here is incorrect, please submit your changes.

Hardware requirements

To be able to run JNode, your hardware should be at least equal to or better than:

- Pentium class CPU with Page Size Extensions (PSE) feature

- 512MB RAM

In order to run JNode the following hardware is recommended:

- Pentium i3 or better

- 1GB RAM

- CDROM drive

- Modern VGA card (see devices)

JNode Commands

This page contains the available documentation for most of the useful JNode commands. For commands not listed below, try running help <alias> at the JNode command prompt to get the command's syntax description and built-in help. Running alias will list all available command aliases.

If you come across a command that is not documented, please raise an issue. (Better still, if you have website content authoring access, please add a page yourself using one of the existing command pages as a template.)

acpi

acpi

| Synopsis | ||

| acpi | displays ACPI details | |

| acpi | --dump | -d | lists all devices that can be discovered and controlled through ACPI |

| acpi | --battery | -b | displays information about installed batteries |

|

Details | ||

|

The acpi command currently just displays some information that can be gleaned from the host's "Advanced Configuration & Power Interface" (ACPI). In the future we would like to interact with ACPI to do useful things. However, this appears to be a complex topic, rife with compatibility issues, so don't expect anything soon.

The ACPI specifications can be found on the net, also have a look at wikipedia. |

||

|

Bugs | ||

| This command does nothing useful at the moment; it is a work in progress. | ||

alias

alias

| Synopsis | ||

| alias | prints all available aliases and corresponding classnames | |

| alias | <alias> <classname> | creates an alias for a given classname |

| alias | -r <alias> | removes an existing alias |

|

Details | ||

|

The alias command creates a binding between a name (the alias) and the fully qualified Java name of the class that implements the command. When an alias is created, no attempt is made to check that the supplied Java class name denotes a suitable Java class. If the alias name is already in use, alias will update the binding.

If the classname argument is actually an existing alias name, the alias command will create a new alias that is bound to the same Java classname as the existing alias. A command class (e.g. one whose name is given by an aliased classname) needs to implement an entry point method with one of the following signatures:

If a command class has both execute and main methods, most invokers will use the former in preference to the latter. Ideally, a command class should extend org.jnode.shell.AbstractCommand. |

||
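The list of entry point signatures is missing from this page, so the sketch below shows only the standard Java `main` form that the text mentions by name. It is a minimal illustration, not a recommended JNode command: the class name `HelloCommand` is hypothetical, and it deliberately does not extend org.jnode.shell.AbstractCommand because that class's API is not described here.

```java
final class HelloCommand {
    // Builds the message separately so the behaviour is easy to check.
    static String greeting(String[] args) {
        return args.length == 0
                ? "Hello, JNode!"
                : "Hello, " + String.join(" ", args) + "!";
    }

    // Classic Java entry point; the shell would pass the command's arguments here.
    public static void main(String[] args) {
        System.out.println(greeting(args));
    }
}
```

Assuming the class is reachable by the shell, it could then be bound with the second form of the synopsis, for example `alias hello HelloCommand`.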

arp

arp

| Synopsis | ||

| arp | prints the ARP cache | |

| arp | -d | clears the ARP cache |

|

Details | ||

|

ARP (the Address Resolution Protocol) is a low level protocol for discovering the MAC address of a network interface on the local network. MAC addresses are the low-level network addresses for routing IP (and other) network packets on a physical network.

When a host needs to communicate with an unknown local IP address, it broadcasts an ARP request on the local network, asking for the MAC address for the IP address. The node with the IP address sends a response giving the MAC address for the network interface corresponding to the IP address. The ARP cache stores IP to MAC address mappings that have previously been discovered. This allows the network stack to send IP packets without repeatedly broadcasting for MAC addresses. The arp command allows you to examine the contents of the ARP cache, and if necessary clear it to get rid of stale entries. |

||
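Conceptually, the ARP cache described above is a lookup table from IP address to MAC address. The following is a toy model for illustration only; `ArpCacheSketch` and its methods are invented names and say nothing about how JNode's network stack actually stores its entries (real caches also expire entries over time).

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

final class ArpCacheSketch {
    private final Map<String, String> ipToMac = new HashMap<>();

    // Record a mapping learned from an ARP response.
    void learn(String ip, String mac) {
        ipToMac.put(ip, mac);
    }

    // A hit means no new ARP request has to be broadcast for this IP address.
    Optional<String> lookup(String ip) {
        return Optional.ofNullable(ipToMac.get(ip));
    }

    // Counterpart of "arp -d": drop every entry, stale or not.
    void clear() {
        ipToMac.clear();
    }

    int size() {
        return ipToMac.size();
    }
}
```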

basename

basename

| Synopsis |

| basename String [Suffix] |

| Details |

| Strip directory and suffix from filenames |

| Compatibility |

| JNode basename is POSIX compatible. |

| Links |
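To make "strip directory and suffix" concrete, here is a small Java sketch that follows the usual POSIX basename rules as I understand them. It is not JNode's implementation; `BasenameSketch` is a hypothetical name, and returning "." for an empty string is one of the results POSIX permits.

```java
final class BasenameSketch {
    static String basename(String path, String suffix) {
        if (path.isEmpty()) {
            return ".";
        }
        // Ignore trailing slashes; a string made only of slashes yields "/".
        int end = path.length();
        while (end > 0 && path.charAt(end - 1) == '/') {
            end--;
        }
        if (end == 0) {
            return "/";
        }
        // Keep what follows the last remaining slash.
        int start = path.lastIndexOf('/', end - 1) + 1;
        String name = path.substring(start, end);
        // Remove the suffix unless it is the whole name.
        if (suffix != null && !suffix.isEmpty()
                && !name.equals(suffix) && name.endsWith(suffix)) {
            name = name.substring(0, name.length() - suffix.length());
        }
        return name;
    }

    public static void main(String[] args) {
        System.out.println(basename(args[0], args.length > 1 ? args[1] : null));
    }
}
```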

beep

beep

| Synopsis | ||

| beep | makes a beep noise | |

|

Details | ||

| Useful for alerting the user, or annoying other people in the room. | ||

bindkeys

bindkeys

| Synopsis | ||

| bindkeys | print the current key bindings | |

| bindkeys | --reset | reset the key bindings to the JNode defaults |

| bindkeys | --add <action> (<vkSpec> | <character>) | add a key binding |

| bindkeys | --remove <action> [<vkSpec> | <character>] | remove a key binding |

|

Details | ||

|

The bindkeys command prints or changes the JNode console's key bindings; i.e. the mapping from key events to input editing actions. The bindkeys form of the command prints the current bindings to standard output, and the bindkeys --reset form resets the bindings to the hard-wired JNode default settings.

The bindkeys --add ... form of the command adds a new binding. The <action> argument specifies an input editing action; e.g. 'KR_ENTER' causes the input editor to append a newline to the input buffer and 'commit' the input line for reading by the shell or application. The <vkSpec> or <character> argument specifies an input event that is mapped to the <action>. The recognized <action> values are listed in the output of the no-argument form of the bindkeys command. The <vkSpec> values are formed from the "VK_xxx" constants defined by the "java.awt.event.KeyEvent" class and "modifier" names; e.g. "Shift+VK_ENTER". The <character> values are either single ASCII printable characters or standard ASCII control character names; e.g. "NUL", "CR" and so on. The bindkeys --remove ... form of the command removes a single binding or (if you leave out the optional <vkSpec> or <character> argument) all bindings for the supplied <action>. |

||

|

Bugs | ||

|

Changing the key bindings in one JNode console affects all consoles.

The bindkeys command provides no online documentation for what the action codes mean / do. |

||

bootp

bootp

| Synopsis | ||

| bootp | <device> | configures a network interface using BOOTP |

|

Details | ||

| The bootp command configures the network interface given by <device> using settings obtained using BOOTP. BOOTP is a network protocol that allows a host to obtain its IP address and netmask, and the IP of the local gateway from a service on the local network. | ||

bsh

bsh

| Synopsis | ||

| bsh | [ --interactive | -i ] [ --file | -f <file> ] [ --code | -c <code> ] | Run the BeanShell interpreter |

| Details | ||

The bsh command runs the BeanShell interpreter. The options are as follows:

If no arguments are given, --interactive is assumed. |

||

bzip2

bzip2

| Synopsis |

|

bzip2 [Options] [File ...] bunzip2 [Options] [File ...] bzcat [File ...] |

| Details |

| The bzip2 program handles compression and decompression of files in the bzip2 format. |

| Compatibility |

| JNode bzip2 aims to be fully compatible with BZip2. |

| Links |

cat

cat

| Synopsis | ||

| cat | copies standard input to standard output | |

| cat | <filename> ... | copies files to standard output |

| cat | --urls | -u <url> ... | copies objects identified by URL to standard output |

|

Details | ||

The cat command copies data to standard output, depending on the command line arguments:

The name "cat" is borrowed from UNIX, and is short for "concatenate". |

||

|

Bugs | ||

| There is no dog command. | ||
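The core of such a command is a byte copy loop. The sketch below shows the idea for any sequence of input streams (standard input, files, or streams opened from URLs), written against plain java.io; `CatSketch` and `copyAll` are hypothetical names and this is not the JNode source.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.util.Collections;
import java.util.List;

final class CatSketch {
    // Copies each input to the output in order ("concatenate"); returns the byte count.
    static long copyAll(List<InputStream> inputs, OutputStream out) {
        byte[] buffer = new byte[8192];
        long total = 0;
        try {
            for (InputStream in : inputs) {
                int n;
                while ((n = in.read(buffer)) != -1) {
                    out.write(buffer, 0, n);
                    total += n;
                }
            }
            out.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }

    public static void main(String[] args) {
        // No-argument form: standard input to standard output.
        copyAll(Collections.singletonList(System.in), System.out);
    }
}
```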

cd

cd

| Synopsis | ||

| cd | [ <dirName> ] | change the current directory |

|

Details | ||

|

The cd command changes the "current" directory for the current isolate, and everything running within it. If a <dirName> argument is provided, that will be the new "current" directory. Otherwise, the current directory is set to the user's "home" directory as given by the "user.home" property.

JNode currently changes the "current" directory by setting the "user.dir" property in the system properties object. |

||

|

Bugs | ||

| The global (to the isolate) nature of the "current" directory is a problem. For example, if you have two non-isolated consoles, changing the current directory in one will change the current directory for the other. | ||
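The mechanism, and the reason the shared state is a problem, can be modelled with a plain `java.util.Properties` object. This is a sketch of the behaviour described above under the assumption that both consoles see the same properties object; `CdSketch` is a hypothetical name, and on a conventional JVM changing "user.dir" does not reliably change the process working directory.

```java
import java.util.Properties;

final class CdSketch {
    // Uses dirName if given, otherwise falls back to "user.home"; records the result in "user.dir".
    static String changeDirectory(Properties props, String dirName) {
        String target = (dirName != null) ? dirName : props.getProperty("user.home");
        props.setProperty("user.dir", target);
        return target;
    }

    public static void main(String[] args) {
        // Two "consoles" sharing one properties object, as in the non-isolated case.
        Properties shared = new Properties();
        shared.setProperty("user.home", "/home/alice"); // hypothetical paths
        shared.setProperty("user.dir", "/");

        changeDirectory(shared, "/jnode/tmp");                // console 1 runs "cd /jnode/tmp"
        System.out.println(shared.getProperty("user.dir"));  // console 2 now sees /jnode/tmp too
    }
}
```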

class

class

| Synopsis | ||

| class | <className> | print details of a class |

|

Details | ||

| The class command allows you to print some details of any class on the shell's current classpath. The <className> argument should be a fully qualified Java class name. Running class will cause the named class to be loaded if this hasn't already happened. | ||

classpath

classpath

| Synopsis | ||

| classpath | prints the current classpath | |

| classpath | <url> | adds the supplied url to the end of the classpath |

| classpath | --clear | clears the classpath |

| classpath | --refresh | cause classes loaded from the classpath to be reloaded on next use |

|

Details | ||

|

The classpath command controls the path that the command shell uses to locate commands to be loaded. By default, the shell loads classes from the currently loaded plug-ins. If the shell's classpath is non-empty, the urls on the path are searched ahead of the plug-ins. Each shell instance has its own classpath.

If the <url> argument ends with a '/', it will be interpreted as a base directory that may contain classes and resources. Otherwise, the argument is interpreted as the path for a JAR file. While "file:" URLs are the norm, protocols like "ftp:" and "http:" should also work. |

||
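The trailing-slash rule is the same convention that the standard `java.net.URLClassLoader` applies to its URLs. A minimal sketch of the classification, with hypothetical names (`ClasspathEntrySketch`, `Kind`) and example URLs that are not taken from JNode:

```java
final class ClasspathEntrySketch {
    enum Kind { DIRECTORY, JAR }

    // A URL ending in '/' names a base directory; anything else is treated as a JAR file.
    static Kind classify(String url) {
        return url.endsWith("/") ? Kind.DIRECTORY : Kind.JAR;
    }

    public static void main(String[] args) {
        System.out.println(classify("file:/jnode/classes/"));          // DIRECTORY
        System.out.println(classify("http://example.org/lib/app.jar")); // JAR
    }
}
```

Note that forgetting the final '/' on a directory URL makes the entry look like a JAR file, which is an easy mistake to make when adding a class directory.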

clear

clear

| Synopsis | ||

| clear | clear the console screen | |

|

Details | ||

| The clear command clears the screen for the current command shell's console. | ||

compile

compile

| Synopsis | ||

| compile | [ --test ] [ --level <level> ] <className> | compile a class to native code |

|

Details | ||

|

The compile command uses the native code compiler to compile or recompile a class on the shell's class path. The <className> argument should be the fully qualified name for the class to be compiled.

The --level option allows you to select the optimization level. The --test option allows you to compile with the "test" compilers. This command is primarily used for native code compiler development. JNode will automatically run the native code compiler on any class that is about to be executed for the first time. |

||

console

console

| Synopsis | ||

| console | --list | -l | list all registered consoles |

| console | --new | -n [--isolated | --i] | starts a new console running the CommandShell |

| console | --test | -t | starts a raw text console (no shell) |

|

Details | ||

|

The console command lists the current consoles, or creates a new one.

The first form of the console command lists all consoles registered with the console manager. The listing includes the console name and the "F<n>" code for selecting it. (Use ALT F<n> to switch consoles.) The second form of the console command starts and registers a new console running a new instance of CommandShell. If the --isolated option is used with --new, the new console's shell will run in a new Isolate. The last form of the console command starts a raw text console without a shell. This is just for testing purposes. |

||

cpuid

cpuid

| Synopsis | ||

| cpuid | print the computer's CPU id and metrics | |

|

Details | ||

| The cpuid command prints the computer's CPU id and metrics to standard output. | ||

date

date

| Synopsis | ||

| date | print the current date | |

|

Details | ||

| The date command prints the current date and time to standard output. The date / time printed are relative to the machine's local time zone. | ||

|

Bugs | ||

|

A fixed format is used to output date and times.

Printing date / time values as UTC is not supported. This command will not help your love life. |

||

del

del

| Synopsis | ||

| del | [ -r | --recursive ] <path> ... | delete files and directories |

| Details | ||

|

The del command deletes the files and/or directories given by the <path> arguments.

Normally, the del command will only delete a directory if it is empty apart from the '.' and '..' entries. The -r option tells the del command to delete directories and their contents recursively. |

||

device

device

| Synopsis | ||

| device | shows all devices | |

| device | <device> | shows a specific device |

| device | ( start | stop | restart | remove ) <device> | perform an action on a device |

|

Details | ||

|

The device command shows information about JNode devices and performs generic management actions on them.

The first form of the device command lists all devices registered with the device manager, showing their device ids, driver class names and statuses. The second form of the device command takes a device id given as the <device> argument. It shows the above information for the corresponding device, and also lists all device APIs implemented by the device. Finally, if the device implements the "DeviceInfo" API, it is used to get further device-specific information. The last form of the device command performs actions on the device denoted by the device id given as the <device> argument. The actions are as follows:

|

||

|

Bugs | ||

| This command does not allow you to perform device-specific actions. | ||

df

df

| Synopsis | ||

| df | [ <device> ] | display disk space usage info |

|

Details | ||

| The df command prints disk space usage information for file systems. If a <device> is given, usage information is displayed for the file system on that device. Otherwise, information is displayed for all registered file systems. | ||

dhcp

dhcp

| Synopsis | ||

| dhcp | <device> | configures a network interface using DHCP |

|

Details | ||

|

The dhcp command configures the network interface given by <device> using settings obtained using DHCP. DHCP is the most commonly used network configuration protocol. The protocol provides an IP address and netmask for the machine, and the IP addresses of the local gateway and the local DNS service.

DHCP allocates IP addresses dynamically. A DHCP server will often allocate the same IP address to a given machine, but this is not guaranteed. If you require a fixed IP address for your JNode machine, you should use bootp or ifconfig. (And, if you have a DHCP service on your network, you need to configure it to not reallocate your machine's statically assigned IP address.) |

||

dir

dir

| Synopsis | ||

| dir | [ <path> ] | list a file or directory |

| Details | ||

| The dir command lists the file or directory given by the <path> argument. If no argument is provided, the current directory is listed. | ||

dirname

dirname

| Synopsis |

| dirname String |

| Details |

| Strip non-directory suffix from file names |

| Compatibility |

| JNode dirname is POSIX compatible. |

| Links |
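The "strip non-directory suffix" rule can be sketched in Java as follows. This is a minimal illustration of the POSIX dirname string algorithm, not JNode's implementation; the class name DirnameSketch is hypothetical.

```java
/** Illustrative POSIX-style dirname; DirnameSketch is a hypothetical name, not a JNode class. */
class DirnameSketch {
    static String dirname(String path) {
        if (path.isEmpty()) {
            return ".";
        }
        int end = path.length();
        // Drop trailing slashes, e.g. "a/b/" is treated like "a/b".
        while (end > 0 && path.charAt(end - 1) == '/') {
            end--;
        }
        if (end == 0) {
            return "/"; // the path consisted only of slashes
        }
        // Drop the last path component (the non-directory suffix).
        while (end > 0 && path.charAt(end - 1) != '/') {
            end--;
        }
        if (end == 0) {
            return "."; // there was no slash at all
        }
        // Drop the separator(s) in front of the removed component.
        while (end > 0 && path.charAt(end - 1) == '/') {
            end--;
        }
        return end == 0 ? "/" : path.substring(0, end);
    }

    public static void main(String[] args) {
        for (String arg : args) {
            System.out.println(dirname(arg));
        }
    }
}
```

For example, the sketch maps "/usr/lib" to "/usr" and a bare file name such as "readme.txt" to ".".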

disasm

disasm

| Synopsis | ||

| disasm | [ --test ] [ --level <level> ] <className> [ <methodName> ] | disassemble a class or method |

|

Details | ||

|

The disasm command disassembles a class or method for a class on the shell's class path. The <className> argument should be the fully qualified name for the class to be compiled. The <methodName> should be a method declared by the class. If the method is overloaded, all of the overloads will be disassembled.

The --level option allows you to select the optimization level. The --test option allows you to compile with the "test" compilers. This command is primarily used for native code compiler development. Note, contrary to its name and description above, the command doesn't actually disassemble the class method(s). Instead it runs the native compiler in a mode that outputs assembly language rather than machine code. |

||

echo

echo

| Synopsis | ||

| echo | [ <text> ... ] | print the argument text |

|

Details | ||

| The echo command prints the text arguments to standard output. A single space is output between the arguments, and text is completed with a newline. | ||
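The output rule described above (single spaces between arguments, a newline at the end) can be sketched in plain Java. This is an illustration only; EchoSketch is a hypothetical class, not the JNode command class.

```java
/** Illustrative echo-like formatter; EchoSketch is a hypothetical name, not a JNode class. */
class EchoSketch {
    /** Joins the arguments with single spaces and completes the text with a newline. */
    static String format(String[] args) {
        return String.join(" ", args) + "\n";
    }

    public static void main(String[] args) {
        System.out.print(format(args));
    }
}
```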

edit

edit

| Synopsis | ||

| edit | <filename> | edit a file |

|

Details | ||

|

The edit command edits a text file given by the <filename> argument. This editor is based on the "charva" text forms system.

The edit command displays a screen with two parts. The top part is the menu section; press ENTER to display the file action menu. The bottom part is the text editing window. The TAB key selects menu entries, and also moves the cursor between the two screen parts. |

||

|

Bugs | ||

| This command needs more comprehensive user documentation. | ||

eject

eject

| Synopsis | ||

| eject | [ <device> ... ] | eject a removable medium |

| Details | ||

| The eject command ejects a removable medium (e.g. CD or floppy disk) from a device. | ||

env

env

| Synopsis | ||

| env | [ -e | --env ] | print the system properties or environment variables |

|

Details | ||

|

By default, the env command prints the system properties to standard output. The properties are printed one per line in ascending order based on the property names. Each line consists of a property name, the '=' character, and the property's value.

If the -e or --env option is given, the env command prints out the current shell environment variables. At the moment, this only works with the bjorne CommandInterpreter and the proclet CommandInvoker. |

||
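The default output format described above (one "name=value" line per property, in ascending name order) can be sketched with the standard Java API. This is a minimal sketch, not the env command's source; EnvSketch is a hypothetical class name.

```java
import java.util.Map;
import java.util.Properties;
import java.util.TreeMap;

/** Illustrative property lister; EnvSketch is a hypothetical name, not a JNode class. */
class EnvSketch {
    /** Formats properties one per line as name=value, sorted by property name. */
    static String format(Properties props) {
        // A TreeMap keeps its keys in ascending order.
        Map<String, String> sorted = new TreeMap<>();
        for (String name : props.stringPropertyNames()) {
            sorted.put(name, props.getProperty(name));
        }
        StringBuilder out = new StringBuilder();
        for (Map.Entry<String, String> entry : sorted.entrySet()) {
            out.append(entry.getKey()).append('=').append(entry.getValue()).append('\n');
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.print(format(System.getProperties()));
    }
}
```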

exit

exit

| Synopsis | ||

| exit | cause the current shell to exit | |

|

Details | ||

| The exit command causes the current shell to exit. If the current shell is the JNode main console shell, JNode will shut down. | ||

|

Bugs | ||

| This should be handled as a shell interpreter built-in, and it should only kill the shell if the user runs it directly from the shell's command prompt. | ||

gc

gc

| Synopsis | ||

| gc | run the garbage collector | |

|

Details | ||

|

The gc command manually runs the garbage collector.

In theory, it should not be necessary to use this command. The garbage collector should run automatically at the most appropriate time. (A modern garbage collector will run most efficiently when it has lots of garbage to collect, and the JVM is in a good position to know when this is likely to be.) In practice, it is necessary to run this command:

|

||

grep

grep

| Synopsis |

| grep [Options] Pattern [File ...] |

| grep [Options] [ -e Pattern | -f File ...] [File ...] |

| Details |

| grep searches the input Files (or standard input if no files are given, or if - is given as a file name) for lines containing a match to the Pattern. By default grep prints the matching lines. |

| Compatibility |

|

JNode grep implements most of the POSIX grep standard.

JNode grep implements most of the GNU grep extensions. |

| Links |

| Bugs |

|

gzip

gzip

| Synopsis |

|

gzip [Options] [-S suffix] [File ...]

gunzip [Options] [-S suffix] [File ...]

zcat [-f] [File ...] |

| Details |

| The gzip program handles the compression and decompression of files in the gzip format. |

| Compatibility |

| JNode gzip aims to be fully compatible with GNU zip. |

| Links |
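For readers who want to produce or read gzip-format data from Java code, the standard java.util.zip classes handle the same format. The following is a minimal round-trip sketch; GzipSketch is a hypothetical helper and is not how the JNode gzip command is implemented.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

/** Illustrative gzip round trip; GzipSketch is a hypothetical name, not a JNode class. */
class GzipSketch {
    /** Compresses a byte array into gzip format. */
    static byte[] compress(byte[] data) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(buffer)) {
            gz.write(data);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    /** Decompresses gzip-format data back into the original bytes. */
    static byte[] decompress(byte[] data) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (GZIPInputStream gz = new GZIPInputStream(new ByteArrayInputStream(data))) {
            byte[] chunk = new byte[4096];
            int n;
            while ((n = gz.read(chunk)) != -1) {
                buffer.write(chunk, 0, n);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }
}
```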

halt

halt

| Synopsis | ||

| halt | shutdown and halt JNode | |

|

Details | ||

| The halt command shuts down JNode services and devices, and puts the machine into a state in which it is safe to turn off the power. | ||

help

help

| Synopsis | ||

| help | [ <name> ] | print command help |

|

Details | ||

|

The help command prints help for the command corresponding to the <name> argument. This should be either an alias known to the current shell, or a fully qualified name of a Java command class. If the <name> argument is omitted, this command prints help information for itself.

Currently, help prints command usage information and descriptions that it derives from a command's old or new-style argument and syntax descriptors. This means that (unlike Unix "man" for example), the usage information will always be up-to-date. No help information is printed for Java applications which have no JNode syntax descriptors. |

||

hexdump

hexdump

| Synopsis | ||

| hexdump | <path> | print a hex dump of a file |

| hexdump | -u | --url <url> | print a hex dump of a URL |

| hexdump | print a hex dump of standard input | |

| Details | ||

| The hexdump command prints a hexadecimal dump of a file, a URL or standard input. | ||
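A hexadecimal dump of a byte sequence can be sketched as below. The line layout used here (an 8-digit hex offset followed by up to 16 byte values) is an assumption made for illustration; the actual output format of the JNode hexdump command is not described in this handbook. HexDumpSketch is a hypothetical class name.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;

/** Illustrative hex dump; the line layout is an assumption, not JNode's actual format. */
class HexDumpSketch {
    private static final int BYTES_PER_LINE = 16;

    /** Formats each group of up to 16 bytes as "<offset> <hex byte> <hex byte> ...". */
    static String dump(byte[] data) {
        StringBuilder out = new StringBuilder();
        for (int offset = 0; offset < data.length; offset += BYTES_PER_LINE) {
            out.append(String.format("%08x", offset));
            int end = Math.min(offset + BYTES_PER_LINE, data.length);
            for (int i = offset; i < end; i++) {
                // Mask with 0xff so that negative bytes print as two hex digits.
                out.append(String.format(" %02x", data[i] & 0xff));
            }
            out.append('\n');
        }
        return out.toString();
    }

    /** Dumps standard input, mirroring the no-argument form of the command. */
    public static void main(String[] args) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        byte[] chunk = new byte[4096];
        InputStream in = System.in;
        int n;
        while ((n = in.read(chunk)) != -1) {
            buffer.write(chunk, 0, n);
        }
        System.out.print(dump(buffer.toByteArray()));
    }
}
```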

history

history

| Synopsis | ||

| history | print the history list | |

| history | [-t | --test] <index> | <prefix> | find and execute a command from the history list |

|

Details | ||

|

The history command takes two forms. The first form (with no arguments) simply prints the current command history list. The list is formatted with one entry per line, with each line starting with the history index.

The second form of the history command finds and executes a command from the history list. If an <index> "i" is supplied, the "ith" entry is selected, with "0" meaning the oldest entry, "1" the second oldest and so on. If a <prefix> is supplied, the first command found that starts with the prefix is executed. The --test (or -t) flag tells the history command to print the selected command instead of executing it. |

||
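The two selection rules can be sketched as follows. This is only an illustration: HistorySketch is a hypothetical class, and the assumption that a prefix search starts from the oldest entry is ours, since the text above does not say in which direction the list is searched.

```java
import java.util.List;

/** Illustrative history lookup; HistorySketch is a hypothetical name, not a JNode class. */
class HistorySketch {
    /** Selects by index, where 0 is the oldest entry in the list. */
    static String byIndex(List<String> history, int index) {
        return history.get(index);
    }

    /**
     * Selects the first entry starting with the prefix, or null if there is none.
     * Assumption: the search runs from the oldest entry to the newest.
     */
    static String byPrefix(List<String> history, String prefix) {
        for (String entry : history) {
            if (entry.startsWith(prefix)) {
                return entry;
            }
        }
        return null;
    }
}
```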

| Bugs | ||

|

The history command currently does not execute the selected command. This is maybe a good thing.

When the shell executes a command, the history list gets reordered in a rather non-intuitive way. |

||

ifconfig

ifconfig

| Synopsis | ||

| ifconfig | List IP address assignments for all network devices | |

| ifconfig | <device> | List IP address assignments for one network device |

| ifconfig | <device> <ipAddress> [ <subnetMask> ] | Assign an IP address to a network device |

|

Details | ||

|

The ifconfig command is used for assigning IP addresses to network devices, and printing network address bindings.

The first form prints the MAC address, assigned IP address(es) and MTU for all network devices. You should see the "loopback" device in addition to devices corresponding to each of your machine's ethernet cards. The second form prints the assigned IP address(es) for the given <device>. The final form assigns the supplied IP address and associated subnet mask to the given <device>. |

||

|

Bugs | ||

|

Only IPv4 addresses are currently supported.

When you attempt to bind an address, the output shows the address as "null", irrespective of the actual outcome. Run "ifconfig <device>" to check that it succeeded. |

||

java

java

| Synopsis | ||

| java | <className> [ <arg> ... ] | run a Java class via its 'main' method |

|

Details | ||

| The java command runs the supplied class by calling its 'public static void main(String[])' entry point. The <className> should be the fully qualified name of a Java class. The java command will look for the class to be run on the current shell's classpath. If that fails, it will look in the current directory. The <arg> list (if any) is passed to the 'main' method as a String array. | ||

kdb

kdb

| Synopsis | ||

| kdb | show the current kdb state | |

| kdb | --on | turn on kdb |

| kdb | --off | turn off kdb |

|

Details | ||

|

The kdb command allows you to control "kernel debugging" from the command line. At the moment, the kernel debugging functionality is limited to copying the output produced by the light-weight "org.jnode.vm.Unsafe.debug(...)" calls to the serial port. If you are running under VMWare, you can configure it to capture this in a file in the host OS.

The kdb command turns this on and off. Kernel debugging is normally off when JNode boots, but you can alter this with a bootstrap switch. |

||

leed, levi

leed & levi

| Synopsis | ||

| leed | <filename> | edit a file |

| levi | <filename> | view a file |

|

Details | ||

|

The leed and levi commands respectively edit and view the text file given by the <filename> argument. These commands open the editor in a new text console, and provide simple intuitive screen-based editing and viewing.

The leed command understands the following control functions:

The levi command understands the following control function:

|

||

|

Bugs | ||

| These commands need more comprehensive user documentation. | ||

loadkeys

loadkeys

| Synopsis | ||

| loadkeys | print the current keyboard interpreter | |

| loadkeys | <country> [ <language> [<variant> ] ] | change the keyboard interpreter |

| Details | ||

|

The loadkeys command allows you to change the current keyboard interpreter. A JNode keyboard interpreter maps device specific codes coming from the physical keyboard into device independent keycodes. This mapping serves to insulate the JNode operating system and applications from the fact that keyboards designed for different countries have different keyboard layouts and produce different codes.

A JNode keyboard interpreter is identified by a triple consisting of a 2 character ISO country code, together with an optional 2 character ISO language code and an optional variant identifier. Examples of valid country codes include "US", "FR", "DE", and so on. Examples of language codes include "en", "fr", "de" and so on. (You can use JNode completion to get complete lists of the codes. Unfortunately, you cannot get the set of supported triples.) When you run "loadkeys <country> ...", the command will attempt to find a keyboard interpreter class that matches the supplied triple. These classes are in the "org.jnode.driver.input.i10n" package, and should be part of the plugin with the same identifier. If loadkeys cannot find an interpreter that matches your triple, try making it less specific; i.e. leave out the <language> and <variant> parts of the triple. Note: JNode's default keyboard layout is given by the "org/jnode/shell/driver/input/KeyboardLayout.properties" file. (The directory location in the JNode source code tree is "core/src/driver/org/jnode/driver/input/".) |

||

| Bugs | ||

|

Loadkeys should allow you to find out what keyboard interpreters are available without looking at the JNode source tree or plugin JAR files.

Loadkeys should allow you to set the keyboard interpreter independently for each connected keyboard. Loadkeys should allow you to change key bindings at the finest granularity. For example, the user should be able to (say) remap the "Windows" key to "Z" to deal with a broken "Z" key. This would allow you to configure JNode to use a currently unsupported keyboard type. (It would also help those game freaks out there who have been pounding on the "fire" key too much.) |

||

locale

locale

| Synopsis | ||

| locale | print the current default Locale | |

| locale | --list | -l | list all available Locales |

| locale | <language> [ <country> [<variant> ] ] | change the default Locale |

| Details | ||

| The locale command allows you to print, or change JNode's default Locale, or list all available Locales. | ||

log4j

log4j

| Synopsis | ||

| log4j | --list | -l | list the current log4j Loggers |

| log4j | <configFile> | reloads log4j configs from a file |

| log4j | --url | -u <configURL> | reloads log4j configs from a URL |

| log4j | --setLevel | -s <level> [ <logger> ] | changes logging levels |

|

Details | ||

|

The log4j command manages JNode's log4j logging system. It can list loggers and logging levels, reload the logging configuration and adjust logging levels.

The first form of the log4j command lists the currently defined Loggers and their explicit or effective logging levels. An effective level is typically inherited from the "root" logger, and is shown in parentheses. The second and third forms of the log4j command reload the log4j configurations from a file or URL. The final form of the log4j command allows you to manually change logging levels. You can use completion to see what the legal logging levels and the current logger names are. If no <logger> argument is given, the command will change the level for the root logger. |

||

ls

ls

| Synopsis | ||

| ls | [ <path> ... ] | list files and directories |

| Details | ||

| The ls command lists the files and/or directories given by the <path> arguments. If no arguments are provided, the current directory is listed. | ||

| Bugs | ||

| The current output format for 'ls' does not clearly distinguish between an argument that is a file and one that is a directory. A format that looks more like the output for UNIX 'ls' would be better. | ||

lsirq

lsirq

| Synopsis | ||

| lsirq | print IRQ handler information | |

|

Details | ||

| The lsirq command prints interrupt counts and device names for each IRQ. | ||

memory

memory

| Synopsis | ||

| memory | show JNode memory usage | |

| Details | ||

| The memory command shows how much JNode memory is in use and how much is free. | ||
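Java code can obtain similar used/free figures through the standard Runtime API. The sketch below is illustrative only: MemorySketch is a hypothetical class, the report wording is invented for the example, and the JNode memory command may well use VM-internal interfaces instead.

```java
/** Illustrative memory report; MemorySketch is a hypothetical name, not a JNode class. */
class MemorySketch {
    /** Builds a one-line report from total and free heap sizes given in bytes. */
    static String report(long total, long free) {
        long used = total - free;
        return "Total: " + total + " bytes, used: " + used + " bytes, free: " + free + " bytes";
    }

    public static void main(String[] args) {
        Runtime runtime = Runtime.getRuntime();
        // totalMemory() and freeMemory() describe the heap currently reserved by the VM.
        System.out.println(report(runtime.totalMemory(), runtime.freeMemory()));
    }
}
```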

mkdir

mkdir

| Synopsis | ||

| mkdir | <path> | create a new directory |

| Details | ||

| The mkdir command creates a new directory. All directories in the supplied path must already exist. | ||

mount

mount

| Synopsis | ||

| mount | show all mounted file systems | |

| mount | <device> <directory> <fsPath> | mount a file system |

|

Details | ||

|

The mount command manages mounted file systems. The first form of the command shows all mounted file systems showing the mount points and the device identifiers.

The second form of the command mounts a file system. The file system on <device> is mounted as <directory>, with <fsPath> specifying the directory in the file system being mounted that will be used as the root of the file system. Note that the mount point given by <directory> must not exist before mount is run. (JNode mounts the file system as the mount point, not on top of it as UNIX and Linux do.) |

||

namespace

namespace

| Synopsis | ||

| namespace | Print the contents of the system namespace | |

| Details | ||

| The namespace command shows the contents of the system namespace. The output gives the class names of the various managers and services in the namespace. | ||

netstat

netstat

| Synopsis | ||

| netstat | Print network statistics | |

|

Details | ||

| The netstat command prints address family and protocol statistics gathered by JNode's network protocol stacks. | ||

onheap

onheap

| Synopsis | ||

| onheap | [--minCount <count>] [--minTotalSize <size>] [--className <size>]* | Print per-class heap usage statistics |

| Details | ||

|

The onheap command scans the heap to gather statistics on heap usage. Then it outputs a per-class breakdown, showing the number of instances of each class and the total space used by those instances.

When you run the command with no options, the output report shows the heap usage for all classes. This is typically too large to be directly useful. If you are looking for statistics for specific classes, you can pipe the output to the grep command and select the classes of interest with a regex. If you are trying to find out what classes are using a lot of space, you can use the onheap command's options to limit the output as follows:

|

||

page

page

| Synopsis | ||

| page | [ <file> ] | page a file |

| page | page standard input | |

| Details | ||

|

The page command displays the supplied file a screen page at a time on a new virtual console. If no arguments are provided, standard input is paged.

The command uses keyboard input to control paging. For example, a space character advances one screen page and ENTER advances one line. Enter 'h' for a listing of the available pager commands and actions. |

||

| Bugs | ||

|

While the current implementation does not pre-read an input stream, nothing will be displayed until the next screen full is available. Also, the entire contents of the file or input stream will be buffered in memory.

A number of useful features supported by typical 'more' and 'less' commands have not been implemented yet. |

||

ping

ping

| Synopsis | ||

| ping | <host> | Ping a remote host |

|

Details | ||

| The ping command sends ICMP PING messages to the remote host given by <host> and prints statistics on the replies received. Pinging is a commonly used technique for testing that a remote host is contactable. However, ping "failure" does not necessarily mean that the machine is uncontactable. Gateways and even hosts are often configured to silently block or ignore PING messages. | ||

|

Bugs | ||

| The ping command uses hard-wired parameters for the PING packet's TTL, size, count, interval and timeout. These should be command line options. | ||

plugin

plugin

| Synopsis | ||

| plugin | List all plugins and their status | |

| plugin | <plugin> | List a given plugin |

| plugin | --load | -l <plugin> [ <version> ] | Load a plugin |

| plugin | --unload | -u <plugin> | Unload a plugin |

| plugin | --reload | -r <plugin> [ <version> ] | Reload a plugin |

| plugin | --addLoader | -a <url> | Add a new plugin loader |

|

Details | ||

|

The plugin command lists and manages plugins and plugin loaders.

The no argument form of the command lists all plugins known to the system, showing each one's status. The one argument form lists a single plugin. The --load, --unload and --reload options tell the plugin command to load, unload or reload a specified plugin. The --load and --reload forms can also specify a version of the plugin to load or reload. The --addLoader option configures a new plugin loader that will load plugins from the location given by the <url>. |

||

propset

propset

| Synopsis | ||

| propset | [ -s | --shell ] <name> [ <value> ] | Set or remove a property |

|

Details | ||

|

The propset command sets and removes properties in either the System property space or (if -s or --shell is used) Shell property space. If both <name> and <value> are supplied, the property <name> is set to the supplied <value>. If just <name> is given, the named property is removed.

The System property space consists of the properties returned by "System.getProperty()". This space is currently isolate-wide, but there are moves afoot to make it proclet specific. The Shell property space consists of properties stored by each Shell instance. This space is separate from a shell interpreter's variable space, and persists over changes in a Shell's interpreter. The 'set' command is an alias for 'propset', but if you are using the 'bjorne' interpreter the 'set' alias is obscured by the POSIX 'set' builtin command which has incompatible semantics. Hence 'propset' is the recommended alias. |

||
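For the System property space, the set-or-remove behaviour described above corresponds to two standard Java calls. The following is a minimal sketch under that reading; PropSetSketch is a hypothetical class, and the Shell property space is not covered because its API is not described here.

```java
/** Illustrative set-or-remove of a System property; PropSetSketch is a hypothetical name. */
class PropSetSketch {
    /** Sets the property when a value is supplied, and removes it when the value is null. */
    static void apply(String name, String value) {
        if (value != null) {
            System.setProperty(name, value);
        } else {
            System.clearProperty(name);
        }
    }

    public static void main(String[] args) {
        // Mirrors "propset <name> [ <value> ]": one argument removes, two arguments set.
        apply(args[0], args.length > 1 ? args[1] : null);
    }
}
```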

pwd

pwd

| Synopsis | ||

| pwd | print the pathname for current directory | |

|

Details | ||

| The pwd command prints the pathname for the current directory; i.e. the value of the System "user.dir" property mapped to an absolute pathname. Note that the current directory is not guaranteed to exist, or to ever have existed. | ||

ramdisk

ramdisk

| Synopsis | ||

| ramdisk | -c | --create [ -s | --size <size> ] | |

| Details | ||

|

The ramdisk command manages RAM disk devices. A RAM disk is a simulated disk device that uses RAM to store its state.

The --create form of the command creates a new RAM disk with a size in bytes given by the --size option. The default size is 16K bytes. Note that the RAM disk has a notional block size of 512 bytes, so the size should be a multiple of that. |

||
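Since the size should be a multiple of the 512 byte block size, a script or tool that computes a size may want to round it up first. The helper below is a hypothetical sketch of that arithmetic; the handbook does not say whether the ramdisk command itself rounds or rejects other sizes.

```java
/** Illustrative size rounding; RamDiskSize is a hypothetical helper, not a JNode class. */
class RamDiskSize {
    /** Notional RAM disk block size in bytes, as stated in the text above. */
    static final long BLOCK_SIZE = 512;

    /** Rounds a requested size in bytes up to the next multiple of the block size. */
    static long roundUpToBlock(long size) {
        if (size < 0) {
            throw new IllegalArgumentException("size must not be negative: " + size);
        }
        return ((size + BLOCK_SIZE - 1) / BLOCK_SIZE) * BLOCK_SIZE;
    }

    public static void main(String[] args) {
        System.out.println(roundUpToBlock(Long.parseLong(args[0])));
    }
}
```

For instance, a request for 1000 bytes rounds up to 1024 bytes (two blocks), while the 16K default is already a whole number of blocks.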

reboot

reboot

| Synopsis | ||

| reboot | shutdown and reboot JNode | |

|

Details | ||

| The reboot command shuts down JNode services and devices, and then reboots the machine. | ||

remoteout

remoteout

| Synopsis | ||

| remoteout | [--udp | -u] --host | -h <host> [--port | -p <port>] | Copy console output and logging to a remote receiver |

| Details | ||

Running the remoteout command tells the shell to copy console output (both 'out' and 'err') and logger output to a remote TCP or UDP receiver. The options are as follows:

Before you run remoteout on JNode, you need to start a TCP or UDP receiver on the relevant remote host and port. The JNode codebase includes a simple receiver application implemented in Java. You can run it as follows:

java -cp $JNODE/core/build/classes org.jnode.debug.RemoteReceiver &

Running the RemoteReceiver application with the --help option will print out a "usage" message. Notes:

|

||

| Bugs | ||

|

In addition to the inherent lossiness of UDP, the UDPOutputStream implementation can discard output arriving simultaneously from multiple threads.

Logger output redirection is disabled in TCP mode due to a bug that triggers kernel panics. There is currently no way to turn off console/logger copying once it has been started. Running remoteout and a receiver on the same JNode instance may cause JNode to lock up in a storm of console output. |

||

resolver

resolver

| Synopsis | ||

| resolver | List the DNS servers the resolver uses | |

| resolver | --add | -a <ipAddr> | Add a DNS server to the resolver list |

| resolver | --del | -d <ipAddr> | Remove a DNS server from the resolver list |

| Details | ||

|

The resolver command manages the list of DNS servers that the Resolver uses to resolve names of remote computers and services.

The zero argument form of resolver lists the IP addresses of the DNS servers in the order that they are used. The --add form adds a DNS server (identified by a numeric IP address) to the front of the resolver list. The --del form removes a DNS server from the resolver list. |

||

route

route

| Synopsis | ||

| route | List the network routing tables | |

| route | --add | -a <target> <device> [ <gateway> ] | Add a new route to the routing tables |

| route | --del | -d <target> <device> [ <gateway> ] | Remove a route from the routing tables |

| Details | ||

|

The routing table tells the JNode network stacks which network devices to use to send packets to remote machines. A routing table entry consists of the "target" address for a host or network, the device to use when sending to that address, and optionally the address of the local gateway to use.

The route command manages the routing table. The no-argument form of the command lists the current routing table. The --add and --del forms add and delete routes respectively. For more information on how to use route to configure JNode networking, refer to the FAQ. |

||

rpcinfo

rpcinfo

| Synopsis | ||

| rpcinfo | <host> | Probe a remote host's ONC portmapper service |

|

Details | ||

| The rpcinfo command sends a query to the ONC portmapper service running on the remote <host> and lists the results. | ||

run

run

| Synopsis | ||

| run | <file> | Run a command script |

|

Details | ||

The run command runs a command script. If the script starts with a line of the form

#!<interpreter> where <interpreter> is the name of a registered CommandInterpreter, the script will be run using the nominated interpreter. Otherwise, the script will be run using the shell's current interpreter. |

||
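The interpreter selection rule can be sketched as a check on the script's first line. This is an illustration of the rule as stated above, not the run command's code; ShebangSketch is a hypothetical class, and a null result stands for "use the shell's current interpreter".

```java
/** Illustrative "#!<interpreter>" detection; ShebangSketch is a hypothetical name. */
class ShebangSketch {
    /** Returns the interpreter named on a leading "#!" line, or null if there is none. */
    static String interpreterFor(String script) {
        int endOfLine = script.indexOf('\n');
        String firstLine = (endOfLine < 0 ? script : script.substring(0, endOfLine)).trim();
        if (!firstLine.startsWith("#!")) {
            return null;
        }
        String name = firstLine.substring(2).trim();
        return name.isEmpty() ? null : name;
    }
}
```

A script beginning with "#!bjorne" would therefore select the bjorne interpreter, provided it is registered.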

startawt

startawt

| Synopsis | ||

| startawt | start the JNode Graphical User Interface | |

|

Details | ||

|

The startawt command starts the JNode GUI and launches the desktop class specified by the system property jnode.desktop. The default value is "org.jnode.desktop.classic.Desktop".

There is more information on the JNode GUI page, including information on how to exit the GUI. |

||

syntax

syntax

| Synopsis | ||

| syntax | lists all aliases that have a defined syntax | |

| syntax | --load | -l | loads the syntax for an alias from a file |

| syntax | --dump | -d | dumps the syntax for an alias to standard output |

| syntax | --dump-all | dumps all syntaxes to standard output |

| syntax | --remove | -r alias | remove the syntax for the alias |

|

Details | ||

|

The syntax command allows you to override the built-in syntaxes for commands that use the new command syntax mechanism. The command can "dump" a command's current syntax specification as XML, and "load" a new one from an XML file. It can also "remove" a syntax, provided that the syntax was defined or overridden in the command shell's syntax manager.

The built-in syntax for a command is typically specified in the plugin descriptor for a parent plugin of the command class. If there is no explicit syntax specification, a default one will be created on-the-fly from the command's registered arguments. Note: not all classes use the new syntax mechanism. Some JNode command classes use an older mechanism that is being phased out. Other command classes use the classic Java approach of decoding arguments passed via a "public static void main(String[])" entry point. |

||

|

Bugs | ||

| The XML produced by "--dump" or "--dump-all" should be pretty-printed to make it more readable / editable. | ||

tar

tar

| Synopsis |

| tar -Acdtrux [Options] [File ...] |

| Details |

| The tar program provides the ability to create tar archives, as well as various other kinds of manipulation. For example, you can use tar on previously created archives to extract files, to store additional files, or to update or list files which were already stored. |

| Compatibility |

| JNode tar aims to be fully compliant with GNU tar. |

| Links |

tcpinout

tcpinout

| Synopsis | ||

| tcpinout | <host> <port> | Run tcpinout in client mode |

| tcpinout | <local port> | Run tcpinout in server mode |

|

Details | ||

|

The tcpinout command is a test utility that sets up a TCP connection to a remote host and then connects the command's input and output streams to the socket. The command's standard input is read and sent to the remote machine, and simultaneously output from the remote machine is written to the command's standard output. This continues until the remote host closes the socket or a network error occurs.

In "client mode", the tcpinout command opens a connection to the supplied <host> and <port>. This assumes that there is a service on the remote host that is "listening" for connections on the port. In "server mode", the tcpinout command listens for an incoming TCP connection on the supplied <local port>. |

||

thread

thread

| Synopsis | ||

| thread | [--groupDump | -g] | Display info for all extant Threads |

| thread | <threadName> | Display info for the named Thread |

| Details | ||

|

The thread command can display information for a single Thread or all Threads that are still extant.

The first form of the command traverses the ThreadGroup hierarchy, displaying information for each Thread that it finds. The information displayed consists of the Thread's 'id', its 'name', its 'priority' and its 'state'. The latter tells you (for example) if the thread is running, waiting on a lock or exited. If the Thread has died with an uncaught exception, you will also see a stacktrace. If you set the --groupDump flag, the information is produced by calling the "GroupInfo.list()" debug method. The output contains more information but the format is ugly. The second form of the thread command outputs information for the thread given by the <threadName> argument. No ThreadGroup information is shown. |

||
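The traversal performed by the first form can be approximated with the standard ThreadGroup API. The sketch below is illustrative only: ThreadListSketch is a hypothetical class, the output layout is invented, and it does not print stack traces for threads that died with an uncaught exception.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative thread lister; ThreadListSketch is a hypothetical name, not a JNode class. */
class ThreadListSketch {
    /** Walks up from the current thread's group to the root of the ThreadGroup hierarchy. */
    static ThreadGroup rootGroup() {
        ThreadGroup group = Thread.currentThread().getThreadGroup();
        while (group.getParent() != null) {
            group = group.getParent();
        }
        return group;
    }

    /** Describes a thread by its id, name, priority and state. */
    static String describe(Thread thread) {
        return thread.getId() + " " + thread.getName()
                + " priority=" + thread.getPriority()
                + " state=" + thread.getState();
    }

    /** Lists all live threads reachable from the root group, including those in subgroups. */
    static List<String> listAll() {
        ThreadGroup root = rootGroup();
        // activeCount() is only an estimate, so leave some headroom in the array.
        Thread[] threads = new Thread[root.activeCount() * 2 + 16];
        int count = root.enumerate(threads, true);
        List<String> lines = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            lines.add(describe(threads[i]));
        }
        return lines;
    }

    public static void main(String[] args) {
        for (String line : listAll()) {
            System.out.println(line);
        }
    }
}
```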

| Bugs | ||

|

The output does not show the relationship between ThreadGroups unless you use --groupDump.

The second form of the command should set a non-zero return code if it cannot find the requested thread. There should be a variant for selecting Threads by 'id'. |

||

time

time

| Synopsis |

| time Alias [Args] |

| Details |

| Executes the command given by Alias and outputs the total execution time of that command. |

touch

touch

| Synopsis | ||

| touch | <filename> | create a file if it does not exist |

|

Details | ||

| The touch command creates the named file if it does not already exist. If the <filename> is a pathname rather than a simple filename, the command will also create parent directories as required. | ||

unzip

unzip

| Synopsis |

| unzip [Options] Archive [File ...] [-x Pattern] [-d Directory] |

| Details |

| The unzip program handles the extraction and listing of archives based on the PKZIP format. |

| Compatibility |

| JNode unzip aims to be compatible with Info-ZIP. |

| Links |

utest

utest

| Synopsis | ||

| utest | <className> | runs the JUnit tests in a class |

|

Details | ||

| The utest command loads the class given by <className>, creates a JUnit TestSuite from it, and then runs the TestSuite using a text-mode TestRunner. The results are written to standard output. | ||

vminfo

vminfo

| Synopsis | ||

| vminfo | [ --reset ] | show JNode VM information |

|

Details | ||

| The vminfo command prints out some statistics and other information about the JNode VM. The --reset flag causes some VM counters to be zeroed after their values have been printed. | ||

wc

wc

| Synopsis |

| wc [-cmlLw] [File ...] |

| Details |

| Prints newline, word and byte counts for each file. |

| Compatibility |

| JNode wc is POSIX compatible. |

| Links |
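The three default counts can be sketched in Java as below. This is a simplified illustration, not JNode's wc: WcSketch is a hypothetical class, words are taken to be runs of bytes separated by ASCII whitespace, and the -m and -L options are not modelled.

```java
import java.nio.charset.StandardCharsets;

/** Illustrative newline/word/byte counter; WcSketch is a hypothetical name. */
class WcSketch {
    /** Returns an array holding {newline count, word count, byte count}. */
    static long[] count(byte[] data) {
        long lines = 0;
        long words = 0;
        boolean inWord = false;
        for (byte b : data) {
            if (b == '\n') {
                lines++;
            }
            boolean whitespace = b == ' ' || b == '\n' || b == '\t'
                    || b == '\r' || b == 0x0b || b == 0x0c;
            if (whitespace) {
                inWord = false;
            } else if (!inWord) {
                // A word starts at the first non-whitespace byte after whitespace.
                inWord = true;
                words++;
            }
        }
        return new long[] {lines, words, data.length};
    }

    public static void main(String[] args) {
        long[] c = count(String.join(" ", args).getBytes(StandardCharsets.UTF_8));
        System.out.println(c[0] + " " + c[1] + " " + c[2]);
    }
}
```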

zip

zip

| Synopsis |

| zip [Options] [Archive] [File ...] [-xi Pattern] |

| Details |

| The zip program handles the creation and modification of zip archives based on the PKZIP format. |

| Compatibility |

| JNode zip aims to be compatible with Info-ZIP. |

| Links |

JNode GUI

Starting the JNode GUI

JNode supports a GUI which runs a graphical desktop and a limited number of applications. The normal way to launch the JNode GUI is to boot JNode normally, and then at the console command prompt run the following:

JNode> gc

JNode> startawt

The screen will go blank for some time (30 to 60 seconds is common), and then the JNode desktop will be displayed.

Using the JNode GUI

The JNode GUI enables the following special key bindings:

| <ALT> + <CTRL> + <F5> | Refresh the GUI | |

| <ALT> + <F11> | Leaves the GUI | |

| <ALT> + <F12> | Quits the GUI | |

| <ALT> + <CTRL> + <BackSpace> | Quits the GUI | (Don't use this if you are running JNode under Linux/Unix: it will quit the Linux GUI) |

Trouble-shooting

If the GUI fails to come up after a reasonable length of time, try using <ALT> + <F12> or <ALT> + <CTRL> + <BackSpace> to return to the text console. When you get back to the console, look for any relevant messages on the current console and on the logger console (<ALT> + <F7>).

One possible cause of the GUI not launching is that JNode may run out of memory while compiling the GUI plugins to native code. If this appears to be the case and you are running a virtual PC (e.g. using VMware), try increasing the memory size of the virtual PC.

Another possible cause of problems may be that JNode doesn't have a working device driver for your PC's graphics card. If this is the case, you could try booting JNode in VESA mode. To do this, simply boot JNode selecting a "(VESA mode)" entry from the GRUB boot menu.

JNode Shell

Introduction

The JNode command shell allows commands to be entered and run interactively from the JNode command prompt or run from command script files. Input entered at the command prompt (or read from a script file) is first split into command lines by a command interpreter; see below. Each command line is split into a command name (an alias in JNode parlance) and a sequence of arguments. Finally, each command alias is mapped to a class name, and run by a command invoker.
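The pipeline described above (split a line into an alias and arguments, then map the alias to a class name) can be sketched as follows. Everything here is an assumption made for illustration: the class ShellSketch, the alias table and the class names in it are hypothetical and do not reflect JNode's real alias registry or quoting rules.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of "line -> alias + arguments -> class name".
final class ShellSketch {

    // Placeholder alias table; the class names are invented for this example.
    static final Map<String, String> ALIASES = new HashMap<>();
    static {
        ALIASES.put("touch", "example.commands.TouchCommand");
        ALIASES.put("wc", "example.commands.WcCommand");
    }

    /** Splits a line on whitespace, keeping double-quoted text together. */
    static List<String> tokenize(String line) {
        List<String> tokens = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        boolean inQuotes = false;
        boolean hasToken = false;
        for (char c : line.toCharArray()) {
            if (c == '"') {
                inQuotes = !inQuotes;
                hasToken = true; // "" counts as an (empty) argument
            } else if (Character.isWhitespace(c) && !inQuotes) {
                if (hasToken) {
                    tokens.add(current.toString());
                    current.setLength(0);
                    hasToken = false;
                }
            } else {
                current.append(c);
                hasToken = true;
            }
        }
        if (hasToken) {
            tokens.add(current.toString());
        }
        return tokens;
    }

    /** Returns the class name for the alias in the first token, or null if unknown. */
    static String resolve(List<String> tokens) {
        return tokens.isEmpty() ? null : ALIASES.get(tokens.get(0));
    }
}
```

For example, tokenizing the line touch "my file.txt" yields the alias touch and the single argument my file.txt, and resolve then looks up the class name registered for touch.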

The available aliases can be listed by typing

JNode /> alias<ENTER>

and an alias's syntax and built-in help can be displayed by typing

JNode /> help alias<ENTER>

More extensive documentation for most commands can be found in the JNode Commands index.

Keyboard Bindings

The command shell (or more accurately, the keyboard interpreter) implements the following keyboard events:

| <SHIFT>+<UP-ARROW> | Scroll the console up a line |

| <SHIFT>+<DOWN-ARROW> | Scroll the console down a line |

| <SHIFT>+<PAGE-UP> | Scroll the console up a page |

| <SHIFT>+<PAGE-DOWN> | Scroll the console down a page |

| <ALT>+<F1> | Switch to the main command console |

| <ALT>+<F2> | Switch to the second command console |

| <ALT>+<F7> | Switch to the Log console (read only) |

| <ESC> | Show command usage message(s) |

| <TAB> | Command / input completion |

| <UP-ARROW> | Go to previous history entry |

| <DOWN-ARROW> | Go to next history entry |

| <LEFT-ARROW> | Move cursor left |

| <RIGHT-ARROW> | Move cursor right |

| <BACKSPACE> | Delete character to left of cursor |

| <DELETE> | Delete character to right of cursor |

| <CTRL>+<C> | Interrupt command (currently disabled) |

| <CTRL>+<D> | Soft EOF |

| <CTRL>+<Z> | Continue the current command in the background |

| <CTRL>+<L> | Clear the console and the input line buffer |

Note: you can change the key bindings using the bindkeys command.

Command Completion and Incremental Help

The JNode command shell has a sophisticated command completion mechanism that is tied into JNode's native command syntax mechanisms. Completion is performed by typing the <TAB> key.

Suppose you enter a partial command name as follows:

JNode /> if

If you now enter <TAB>, the shell will complete the command as follows:

JNode /> ifconfig

with a space after the "g" so that you can enter the first argument. If you enter <TAB> again, JNode will list the possible completions for the first argument as follows:

eth-pci(0,16,0)

loopback

JNode /> ifconfig

This is telling you that the possible values for the first argument are "eth-pci(0,16,0)" and "loopback"; i.e. the names of all network devices that are currently available. If you now enter "l" followed by <TAB>, the shell will complete the first argument as follows:

JNode /> ifconfig loopback

and so on. Completion can be performed on aliases, option names and argument types such as file and directory paths and device and plugin names.
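The completion behaviour walked through above can be approximated by simple prefix matching. The sketch below is an assumption-laden illustration, not JNode's syntax-driven completer: the class CompletionSketch and its methods are hypothetical, and the candidate lists are supplied by the caller.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of prefix-based completion.
final class CompletionSketch {

    /** Returns all candidates that start with the partial word, in input order. */
    static List<String> matches(String partial, List<String> candidates) {
        List<String> result = new ArrayList<>();
        for (String candidate : candidates) {
            if (candidate.startsWith(partial)) {
                result.add(candidate);
            }
        }
        return result;
    }

    /**
     * If exactly one candidate matches, returns it followed by a space
     * (ready for the next argument); otherwise returns the partial word
     * unchanged, and the caller would list the matches instead.
     */
    static String complete(String partial, List<String> candidates) {
        List<String> found = matches(partial, candidates);
        return found.size() == 1 ? found.get(0) + " " : partial;
    }
}
```

With the device names from the example, an empty partial word matches both "eth-pci(0,16,0)" and "loopback", so both would be listed, while the partial word "l" matches only "loopback" and is completed.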

While completion can be used to jog your memory, it is often useful to be able to see the syntax description for the command you are entering. If you are in the middle of entering a command, entering <CTRL-?> will parse what you have typed in so far against the alias's syntax, and then print the syntax description for the alternative(s) that match what you have entered.

Command Interpreters

The JNode command shell uses a CommandInterpreter object to translate the characters typed at the command prompt into the names and arguments for commands to be executed. There are currently 3 interpreters available:

- "default" - this bare-bones interpreter splits a line into a simple command name (alias) and arguments. It understands argument quoting, but little else.

- "redirecting" - this interpreter adds the ability to use "<" and ">" for redirecting standard input and standard output, and "|" for simple command pipelines. This is the default interpreter.

- "bjorne" - this interpreter is an implementation of the POSIX shell specification; i.e. it is like UNIX and GNU/Linux shells such as "sh", "bash" and "ksh". The bjorne interpreter is still under development. Refer to the bjorne tracking issue for a summary of implemented features and current limitations.

The JNode command shell currently consults the "jnode.interpreter" property to determine what interpreter to use. You can change the current interpreter using the "propset -s" command; e.g.

JNode /> propset -s jnode.interpreter bjorne

Note that this only affects the current console, and that the setting does not persist beyond the next JNode reboot.
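To illustrate what the "redirecting" interpreter adds on top of the "default" one, the following sketch splits a line into pipeline stages and picks out the "<" and ">" redirections. It is a simplified illustration only: the class RedirectSketch and its Stage type are hypothetical, tokens are assumed to be separated by whitespace, and quoting is ignored.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: parses "a < in | b > out" style lines.
final class RedirectSketch {

    /** One command in a pipeline, with optional redirections. */
    static final class Stage {
        final List<String> words = new ArrayList<>(); // alias followed by arguments
        String stdin;  // file named after "<", or null
        String stdout; // file named after ">", or null
    }

    static List<Stage> parse(String line) {
        List<Stage> stages = new ArrayList<>();
        Stage current = new Stage();
        String[] tokens = line.trim().split("\\s+");
        for (int i = 0; i < tokens.length; i++) {
            String token = tokens[i];
            if (token.equals("|")) {
                stages.add(current);       // end of this stage
                current = new Stage();
            } else if ((token.equals("<") || token.equals(">")) && i + 1 < tokens.length) {
                String target = tokens[++i]; // the file name follows the operator
                if (token.equals("<")) {
                    current.stdin = target;
                } else {
                    current.stdout = target;
                }
            } else if (!token.isEmpty()) {
                current.words.add(token);  // a dangling "<" or ">" ends up here
            }
        }
        stages.add(current);
        return stages;
    }
}
```

A real interpreter would also validate the input (for example, reject an empty stage or a redirection without a file name); this sketch leaves that out to keep the parsing logic visible.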

Command Invokers

The JNode command shell uses a CommandInvoker object to execute commands extracted from the command line by the interpreter. This allows us to run commands in different ways. There are currently 4 command invokers available:

- "default" - this invoker runs the command in the current Java Thread.

- "thread" - this invoker runs the command in a new Java Thread.

- "proclet" - this invoker runs the command in a new Proclet. The proclet mechanism is a light-weight process mechanism that gives a degree of isolation, so that the command can have its own standard input, output and error streams. The command can also see the "environment" variables of the parent interpreter. This is the default invoker.

- "isolate" - this invoker runs the command in a new Isolate. The isolate mechanism gives the command its own statics, as if the command is executing in a new JVM. Isolates and the IsolateInvoker are not fully implemented.

The JNode command shell currently consults the "jnode.invoker" property to determine what invoker to use. You can change the current invoker using the "propset -s" command; e.g.

JNode /> propset -s jnode.invoker isolate

Note that this only affects the current console, and that the setting does not persist beyond the next JNode reboot.
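The difference between the "default" and "thread" invokers described above can be sketched in plain Java. This is not JNode's CommandInvoker API: the Invoker interface, the class InvokerSketch and the forName lookup are hypothetical, commands are modelled as Runnable, and the proclet and isolate invokers are omitted because they rely on JNode-specific mechanisms.

```java
// Hypothetical sketch: selects an invocation strategy by name.
final class InvokerSketch {

    /** Illustrative stand-in for an invoker; not the JNode interface. */
    interface Invoker {
        void invoke(Runnable command) throws InterruptedException;
    }

    // "default": run the command in the calling thread.
    static final Invoker DEFAULT = command -> command.run();

    // "thread": run the command in a new thread and wait for it to finish.
    static final Invoker THREAD = command -> {
        Thread worker = new Thread(command, "command");
        worker.start();
        worker.join();
    };

    /** Maps an invoker name (e.g. the value of a property) to a strategy. */
    static Invoker forName(String name) {
        switch (name) {
            case "default":
                return DEFAULT;
            case "thread":
                return THREAD;
            default:
                throw new IllegalArgumentException("unknown invoker: " + name);
        }
    }
}
```

Keeping the strategy behind a small interface is what allows the choice to be driven by a single setting, as the "jnode.invoker" property does in the shell.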

Testing remote programs in shell

If you want to test a Java application, but don't want to recompile JNode completely every time you change your application, you can use the classpath command.

Set up your network; if you don't know how, read the FAQ.

Now you have to set up a web server or TFTP server on your remote machine, where you place your .class or .jar files.

With the classpath command you can now add a remote path. E.g. "classpath add http://192.168.0.1/path/to/classes/". Using "classpath" without arguments shows you the list of added paths. To start your application simply type the class file's name.

For more info read the original forum topic from Ewout, read more about shell commands or have a look at the following example:

On your PC:

Install a web server (e.g. Apache) and start it up. Let's say it has 192.168.0.1 as its IP address. Now create a HelloWorld.java, compile it and place the HelloWorld.class in a directory of your web server; for me that is "/var/www/localhost/htdocs/jnode/".
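A HelloWorld.java for this test could look like the sketch below. The greeting text and the greeting() helper are arbitrary choices made for this example; any class with a standard main method should serve the same purpose.

```java
// Minimal test application; the greeting() helper is only there for illustration.
class HelloWorld {

    static String greeting() {
        return "Hello, World!";
    }

    public static void main(String[] args) {
        System.out.println(greeting());
    }
}
```

Compiling it with javac HelloWorld.java on your PC produces the HelloWorld.class file that is copied to the web server directory.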

Inside JNode:

Type the following lines inside JNode. You just have to replace the IP addresses and network device with values matching your configuration.

ifconfig eth-pci(0,17,0) 192.168.0.6

route add 192.168.0.1 eth-pci(0,17,0)

classpath add http://192.168.0.1/jnode/

Now that the new classpath entry has been added, you can run your HelloWorld application by simply typing

HelloWorld

Performance

Performance of an OS is critical. That's why many have suggested that an OS cannot be written in Java. JNode will not be the fastest OS around for quite some time, but it is and will be a proof that it can be done in Java.

Since release 0.1.6, the interpreter has been removed from JNode, so now all methods are compiled before being executed. Currently two new native code compilers are under development that will add various levels of optimizations to the current compiler. We expect these compilers to bring us much closer to the performance of Sun's J2SDK.

This page will keep track of performance of JNode, measured using various benchmarks, over time.

The performance tests are done on a 2 GHz Pentium 4 with 1 GB of memory.

| ArithOpt, org.jnode.test.ArithOpt | Lower numbers are better. | ||

| Date | JNode Interpreted | JNode Compiled | Sun J2SDK |

| 12-jul-2003 | 1660ms | 108ms | 30ms |

| 19-jul-2003 | 1639ms | 105ms | 30ms |

| 17-dec-2003 | 771ms | 63ms | 30ms |

| 20-feb-2004 | n.a. | 59ms | 30ms |

| 03-sep-2004 | n.a. | 27ms* | 30ms |

| 28-jul-2005 | n.a. | 20ms* | 30ms** |

| Sieve, org.jnode.test.Sieve | Higher numbers are better. | ||

| Date | JNode Interpreted | JNode Compiled | Sun J2SDK |

| 12-jul-2003 | 53 | 455 | 5800 |

| 19-jul-2003 | 55 | 745 | 5800 |

| 17-dec-2003 | 158 | 1993 | 5800 |

| 20-feb-2004 | n.a. | 2002 | 5800 |

| 03-sep-2004 | n.a. | 4320* | 5800 |